Generative AI programs such as ChatGPT, DALL-E, and Bing Chat are powerful tools that offer instructors and researchers a variety of new opportunities.

To harness the potential of generative AI, you must also consider how and when the capabilities of these tools best meet your needs. Just as you wouldn't use a calculator to proofread an essay, you shouldn't expect generative AI tools to fix every problem. When used strategically, however, these tools can be highly effective. On this page, we provide some strategies for addressing the following questions:

- What are some of the strengths and limitations of generative AI?

- When and how should I use generative AI?

Perhaps the most important step in using generative AI is first learning to use these tools appropriately and effectively. This includes developing skill in writing prompts, a practice known as prompt engineering. This page offers some initial guidance for using generative AI tools. We also provide additional resources for developing your competencies, including our page on prompt patterns and a free, self-paced course on Prompt Engineering taught by Jules White, Professor of Computer Science at Vanderbilt University.

What are the strengths and weaknesses of generative AI?

To understand the strengths and weaknesses of generative AI, it helps to keep in mind how it works. Generative AI is essentially very advanced pattern-recognition software. Programs like ChatGPT analyze large sets of training data and produce new outputs that mimic the patterns identified in that data. Because of this, generative AI programs are very good at recognizing and reproducing patterns, but they may be less reliable at producing factually sound output. Additionally, the quality of a generative AI program's output depends heavily on the quality of your prompt. When writing prompts, consider how you might capitalize on generative AI's strengths while minimizing its weaknesses.

The lists below, while not exhaustive, highlight some key strengths and weaknesses of generative AI programs.

Strengths of generative AI

Weaknesses of generative AI

When and how should I use generative AI?

To reap the full benefits of generative AI, it is worth considering both why you want to use it and how you will use it.

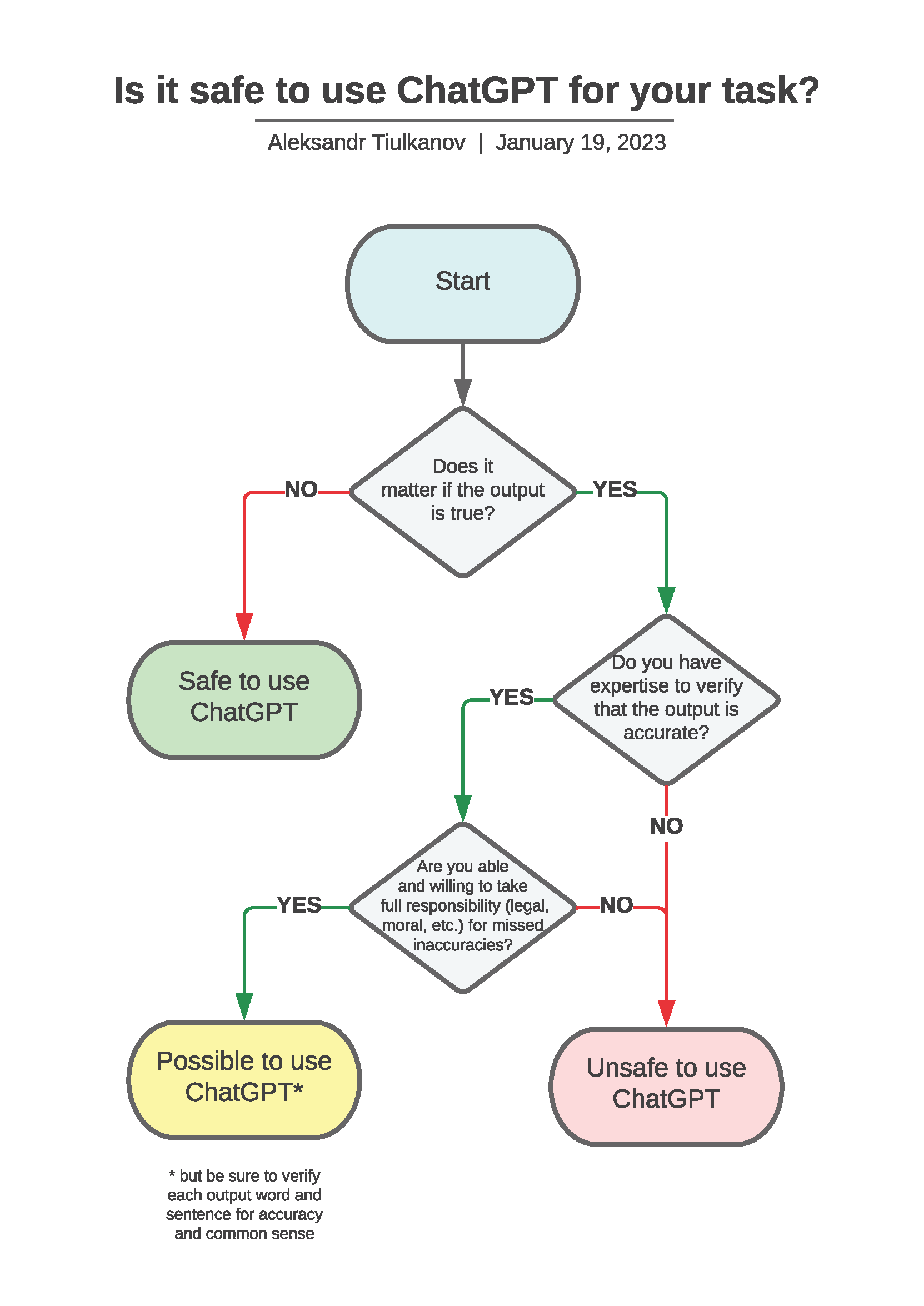

As a starting point, this flowchart¹ can help you determine whether generative AI is an appropriate option for your task.

As you begin a project or task that incorporates generative AI, consider building a strategy based on the following questions:

- What is my purpose and goal in using generative AI? Do you want to make a certain process more efficient? Fill a knowledge gap? Generate new ideas?

- Who is my audience? What are their attitudes towards the use of generative AI? What expectations do they have about the use of generative AI and citation practices? How can I use these tools to meet the needs and/or expectations of my audience?

- What kind of information will I be sharing with generative AI? Am I comfortable with this information potentially being shared or circulated? Am I sharing information that is subject to privacy regulations such as FERPA or HIPAA?

- What specific information or product do I want to get from generative AI? Do I want it to generate brainstorming questions? Do I want it to produce a polished version of a piece of writing?

- How will I prompt AI in a way that generates the output I want? How might generative AI misunderstand my request? What kinds of biases might the AI generate, and how can I adjust my prompt to mitigate these biases?

- How will I review and revise the output that AI generates? What kinds of errors or limitations do I want to pay close attention to? How will I distinguish between helpful and unhelpful output?

¹ Flowchart created by AI and data policy lawyer Aleksandr Tiulkanov.

The following works were consulted in the development of this webpage. For additional perspectives on these topics, we encourage you to review these sources.