Davalos, Eduardo, Zhang, Yike, Srivastava, Namrata, Salas, Jorge Alberto, McFadden, Sara E., Cho, Sun-joo, Biswas, Gautam, & Goodwin, Amanda P. (2025). “LLMs as educational analysts: Transforming multimodal data traces into actionable reading assessment reports.” In Lecture Notes in Computer Science (Vol. 15878, pp. 191-204). https://doi.org/10.1007/978-3-031-98417-4_14

Reading assessments are important for improving students' comprehension, but many educational technology tools focus mostly on final scores, offering little insight into how students actually read and think. This study explores combining multiple data sources (eye-tracking data, test results, assessment content, and teaching standards) to gain deeper insight into reading behavior. We use unsupervised learning techniques to identify distinct reading patterns; a large language model (LLM) then summarizes this information into easy-to-read reports for teachers, simplifying interpretation. Both LLM experts and human educators evaluated these reports for clarity, accuracy, relevance, and pedagogical usefulness. Our results show that LLMs can act effectively as educational analysts, turning complex data into insights that teachers find helpful. While automated reports are promising, human oversight remains necessary to ensure the results are reliable and fair. This work advances human-centered AI in education by connecting data-driven analysis with practical classroom applications.
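The abstract does not name the unsupervised method used to find reading patterns. As a hedged illustration only, the sketch below groups students with plain k-means (a stand-in, not necessarily the authors' technique) over two hypothetical per-student eye-tracking features: mean fixation duration in milliseconds and regression rate. The feature names, the sample values, and the helpers `standardize` and `kmeans` are all assumptions made for this example.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def standardize(rows: List[Point]) -> List[Point]:
    """Z-score each feature column so no single unit (e.g. milliseconds) dominates."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    # Fall back to 1.0 for a constant column to avoid dividing by zero.
    sds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
           for c, m in zip(cols, means)]
    return [tuple((x - m) / s for x, m, s in zip(row, means, sds)) for row in rows]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    # Squared Euclidean distance between two feature vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int, iters: int = 100) -> Tuple[List[int], List[Point]]:
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    # Start from the first point, then repeatedly add the point farthest
    # from every centroid chosen so far.
    centroids: List[Point] = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(_dist2(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda j: _dist2(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids: List[Point] = []
        for j in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_labels == labels and new_centroids == centroids:
            break  # converged: nothing changed in this pass
        labels, centroids = new_labels, new_centroids
    return labels, centroids


# Hypothetical data: (mean fixation duration in ms, regression rate) per student.
students = {
    "s1": (210.0, 0.10), "s2": (220.0, 0.12), "s3": (215.0, 0.11),
    "s4": (320.0, 0.35), "s5": (310.0, 0.33), "s6": (330.0, 0.38),
}
labels, _ = kmeans(standardize(list(students.values())), k=2)
groups = {name: lab for name, lab in zip(students, labels)}
```

Standardizing first matters here: without it, the millisecond-scale fixation feature would swamp the regression rate in the distance computation. Each resulting group is the kind of "reading pattern" that could then be described to an LLM.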

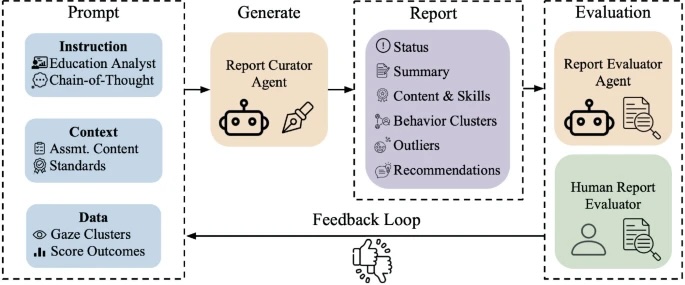

Fig. 1. Proposed pipeline for LLM-driven assessment report generation. By instructing an LLM to role-play as an educational analyst and supplying it with assessment context and data, we construct a prompt that is used to generate a teacher-oriented assessment report.
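The prompt construction in Fig. 1 can be sketched as plain string assembly: a role instruction, then the assessment context, then the student data, then the task. This is a minimal sketch under stated assumptions; the section headings, the `AssessmentContext` fields, the `ROLE` wording, and `build_report_prompt` are hypothetical and do not reproduce the paper's actual prompt.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class AssessmentContext:
    # Hypothetical fields standing in for the "assessment context" in Fig. 1.
    passage_summary: str
    questions: List[str]
    standards: List[str]


# Hypothetical role instruction; the paper's actual wording is not given here.
ROLE = ("You are an educational analyst. Using only the context and data below, "
        "write a concise, teacher-oriented reading assessment report.")


def _bullets(items: Iterable[str]) -> str:
    # Render a list of strings as markdown-style bullet lines.
    return "\n".join(f"- {item}" for item in items)


def build_report_prompt(ctx: AssessmentContext, data: Dict[str, str], role: str = ROLE) -> str:
    """Join role instruction, assessment context, and student data into one prompt."""
    context = (f"Passage: {ctx.passage_summary}\n"
               f"Questions:\n{_bullets(ctx.questions)}\n"
               f"Standards:\n{_bullets(ctx.standards)}")
    data_block = "\n".join(f"{key}: {value}" for key, value in data.items())
    sections = [
        f"## Role\n{role}",
        f"## Assessment context\n{context}",
        f"## Student data\n{data_block}",
        "## Task\nDescribe each reading-behavior group, relate it to the standards, "
        "and suggest next instructional steps.",
    ]
    return "\n\n".join(sections)


# Hypothetical usage with made-up context and a summarised reading-pattern group.
ctx = AssessmentContext(
    passage_summary="Informational text on the water cycle (hypothetical).",
    questions=["What causes condensation?"],
    standards=["Determine the central idea of a text (hypothetical standard)."],
)
prompt = build_report_prompt(ctx, {"group_0": "short fixations, few regressions; 4/5 correct"})
```

The resulting string would then be sent to whichever LLM client is in use; keeping the role, context, and data in separate labelled sections makes it easy to swap in new assessments without rewriting the instruction.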