Ni, Bo; Wang, Yu; Cheng, Lu; Blasch, Erik; Derr, Tyler. “Towards Trustworthy Knowledge Graph Reasoning: An Uncertainty Aware Perspective.” Proceedings of the AAAI Conference on Artificial Intelligence 39, no. 12 (2025): 12417–12425. https://doi.org/10.1609/aaai.v39i12.33353.

Recently, researchers have combined two powerful tools, Knowledge Graphs (which organize information like a big map of facts) and Large Language Models (which generate human-like text), to reduce the models' made-up answers and help them reason better. The knowledge graph gives the model a source of facts to check its answers against.

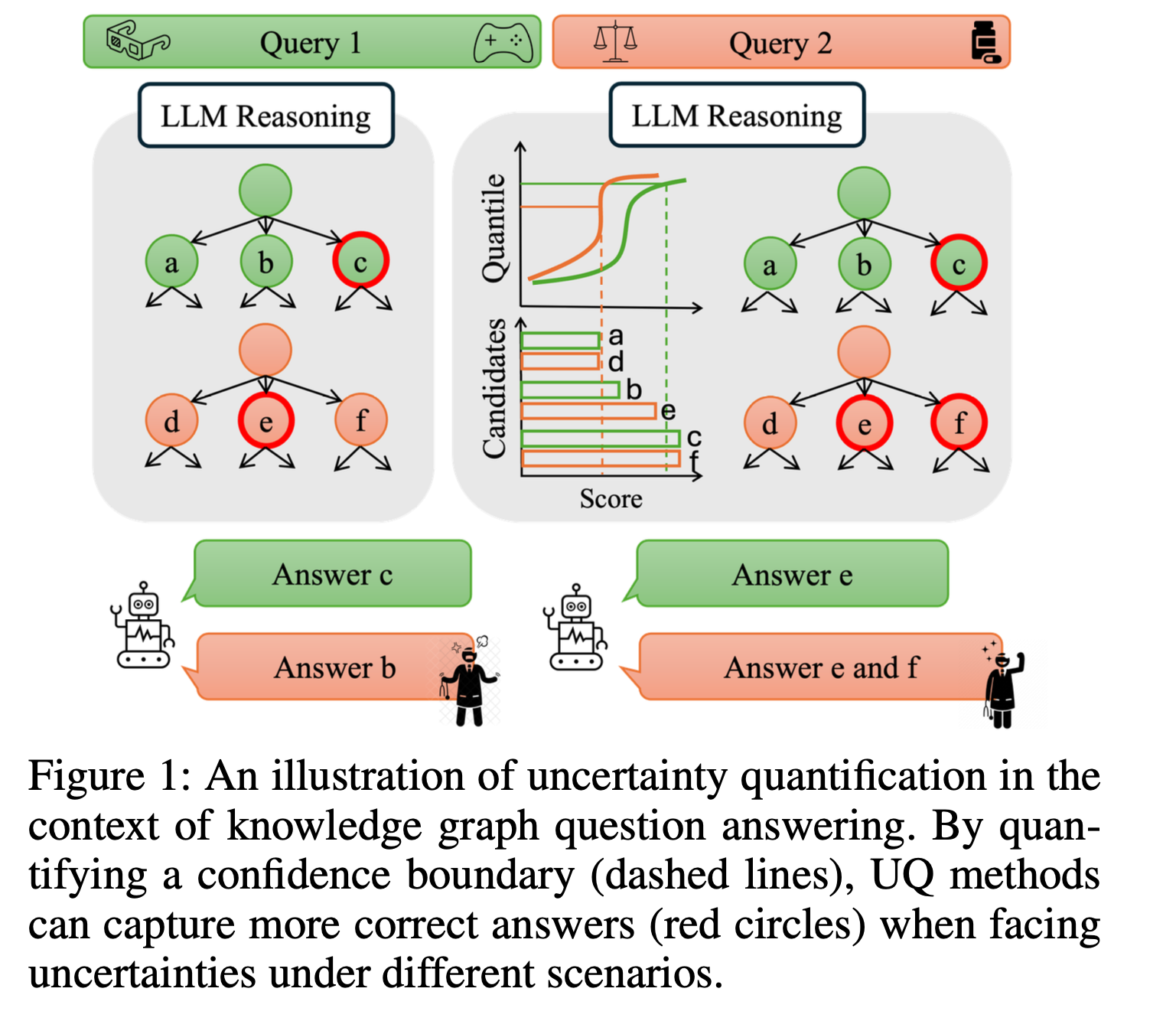

However, these combined systems still struggle to tell how confident they should be in their answers. That matters because in many real-world situations a wrong decision can be costly or harmful. Adding this “uncertainty awareness” is hard because the knowledge graph and the language model are each complex, and they interact in ways that are difficult to analyze.

To address this, the authors propose UAG (Uncertainty Aware Knowledge-Graph Reasoning). Instead of giving a single answer, UAG returns a set of candidate answers sized to match a confidence level chosen in advance. It builds on conformal prediction, a statistical technique that guarantees the returned set contains a correct answer at the chosen rate, and it adds a step that controls how errors add up across the several stages of reasoning. In the authors' experiments, UAG meets the chosen confidence targets while returning answer sets about 40% smaller on average than those of the baseline methods.
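
To give a feel for how a system can "meet a confidence goal", here is a minimal sketch of split conformal prediction, a standard technique for this kind of guarantee. It is an illustration only and not the UAG implementation: the function names, the calibration scores, and the toy candidates are all hypothetical, and a real system would have to handle several reasoning steps over the knowledge graph. Each score measures how poorly an answer fits (lower is better), and the calibration scores are assumed to come from past questions whose correct answers were known.

```python
import math


def conformal_threshold(cal_scores, alpha):
    """Score cutoff for a target error rate alpha (hypothetical helper).

    cal_scores: nonconformity scores of the *correct* answers on
    held-out calibration questions (lower = better fit).
    """
    n = len(cal_scores)
    # Finite-sample corrected rank used by split conformal prediction.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: keep every candidate.
        return float("inf")
    return sorted(cal_scores)[k - 1]


def prediction_set(candidate_scores, threshold):
    """Keep every candidate whose score is within the cutoff."""
    return {ans for ans, score in candidate_scores.items() if score <= threshold}


# Toy calibration data (made up for illustration).
cal_scores = [0.10, 0.60, 0.20, 0.80, 0.30, 0.40, 0.05]
threshold = conformal_threshold(cal_scores, alpha=0.25)

# Toy candidates for one new question, with made-up scores.
candidates = {"Paris": 0.10, "Lyon": 0.55, "Nice": 0.90}
answers = prediction_set(candidates, threshold)
print(threshold, sorted(answers))
```

A smaller `alpha` asks for a stronger guarantee and therefore a larger answer set. Methods in this family compete on how small they can keep the set while still meeting the target.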