Deng, Ruining; Cui, Can; Liu, Quan; Yao, Tianyuan; Remedios, Lucas W.; Bao, Shunxing; Landman, Bennett A.; Wheless, Lee E.; Coburn, Lori A.; Wilson, Keith T.; Wang, Yaohong; Zhao, Shilin; Fogo, Agnes B.; Yang, Haichun; Tang, Yucheng; Huo, Yuankai. “Segment Anything Model (SAM) for Digital Pathology: Assess Zero-shot Segmentation on Whole Slide Imaging.” IS and T International Symposium on Electronic Imaging Science and Technology 37, no. 14 (2025): COIMG-132. https://doi.org/10.2352/EI.2025.37.14.COIMG-132.

The Segment Anything Model (SAM) is a promptable foundation model for image segmentation, the task of identifying and outlining specific parts of an image. Trained on over 1 billion masks from 11 million licensed and privacy-respecting images, SAM is designed to generalize to new tasks without additional training. It can segment images using different types of prompts, such as clicks (points), bounding boxes, or existing masks. This makes SAM especially promising for medical image analysis, including digital pathology, where labeled training data is often limited.

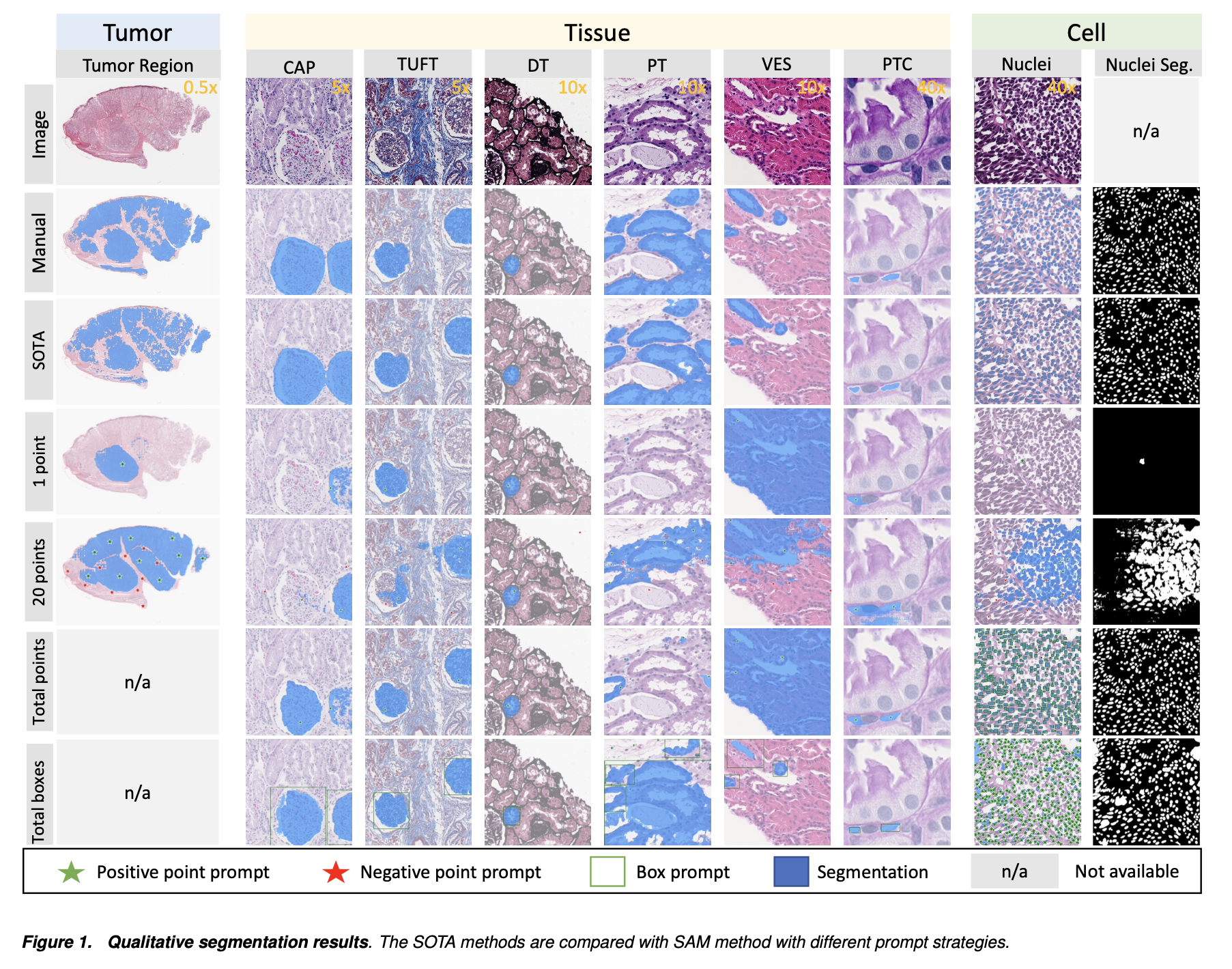

In this study, researchers tested SAM’s ability to perform “zero-shot” segmentation (i.e., without additional training) on whole slide images (WSIs), which are large, high-resolution images used in pathology. They evaluated the model on three representative tasks: tumor segmentation, non-tumor tissue segmentation, and cell nuclei segmentation. The results showed that SAM works well when segmenting large, connected objects like tumors, but it struggles with dense instance segmentation, such as separating many small cell nuclei, even when given as many as 20 prompts per image.
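To make "works well" versus "struggles" concrete, segmentation quality is usually scored by the overlap between a predicted mask and a reference annotation. The sketch below computes the Dice coefficient, a common overlap metric. This summary does not name the metric used in the study, so Dice is an assumption here, and the `dice_score` helper and the toy masks are illustrative only.

```python
from typing import Sequence

# A 2D binary mask: 1 marks object pixels, 0 marks background.
Mask = Sequence[Sequence[int]]


def dice_score(pred: Mask, truth: Mask) -> float:
    """Dice coefficient 2*|A and B| / (|A| + |B|) for two same-shaped binary masks."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    # Count pixels that are foreground in both masks.
    intersection = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    # Total foreground pixels across both masks.
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    # Two empty masks agree perfectly; define the score as 1.0 in that case.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy example (hypothetical data): the prediction covers half of the object
# and spills one pixel into the background.
truth = [[1, 1, 0],
         [1, 1, 0]]
pred = [[1, 1, 1],
        [0, 0, 0]]
score = dice_score(pred, truth)  # 2 * 2 / (3 + 4) = 4/7
```

A score of 1.0 means the masks match exactly and 0.0 means they do not overlap at all. For dense objects such as nuclei, a score like this would typically be computed per object or per image and then averaged.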

The study also identified several limitations of SAM in the context of digital pathology: (1) it has difficulty handling the very high image resolutions typical of WSIs, (2) it struggles with objects at multiple scales, (3) its performance varies with the type and quality of the prompts provided, and (4) it is not optimized for medical images unless it is fine-tuned. The researchers suggest that “few-shot” fine-tuning, in which the model is adapted using a small set of task-specific medical images, could help improve its ability to accurately segment smaller, densely packed objects in medical settings.
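One common way to work around the resolution limitation is to split a slide into fixed-size tiles and segment each tile separately. The following is a minimal sketch of that idea, not the procedure used in the study. The `tile_boxes` helper is hypothetical, and the 1024-pixel tile size and optional overlap are assumptions chosen for illustration.

```python
from typing import Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), with x1 and y1 exclusive


def _starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis so that the tiles cover [0, length)."""
    starts = [0]
    # Keep stepping until the latest tile reaches the end of the axis.
    while starts[-1] + tile < length:
        starts.append(starts[-1] + stride)
    return starts


def tile_boxes(width: int, height: int, tile: int = 1024, overlap: int = 0) -> Iterator[Box]:
    """Yield row-major tile boxes covering a width x height image.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if tile <= 0 or not 0 <= overlap < tile:
        raise ValueError("need tile > 0 and 0 <= overlap < tile")
    stride = tile - overlap
    for y0 in _starts(height, tile, stride):
        for x0 in _starts(width, tile, stride):
            yield x0, y0, min(x0 + tile, width), min(y0 + tile, height)


# Hypothetical 2500 x 1200 pixel region: 3 columns x 2 rows of tiles.
boxes = list(tile_boxes(2500, 1200))
```

Each box could then be read from the slide and passed to the segmentation model on its own. Tiling addresses only the resolution issue: objects cut by tile borders still need to be merged afterwards, and the multi-scale and prompt-sensitivity limitations remain.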