Cui, Can; Deng, Ruining; Guo, Junlin; Liu, Quan; Yao, Tianyuan; Yang, Haichun; Huo, Yuankai. “Enhancing Physician Flexibility: Prompt-Guided Multi-class Pathological Segmentation for Diverse Outcomes.” BHI 2024 – IEEE-EMBS International Conference on Biomedical and Health Informatics, Proceedings (2024). https://doi.org/10.1109/BHI62660.2024.10913563.

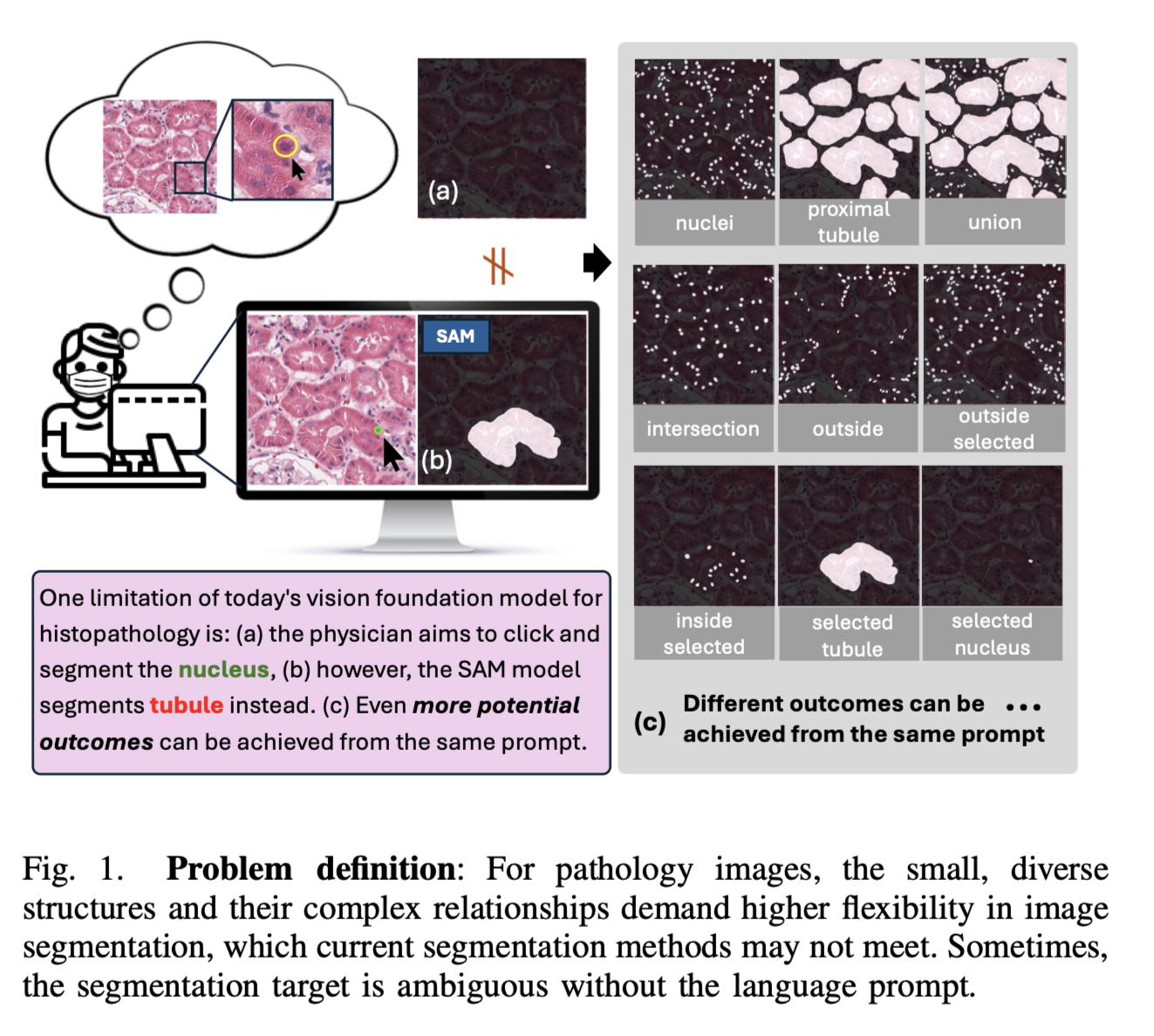

Vision foundation models have recently shown promise in analyzing medical images, especially because they can often work right away without needing to be retrained, a capability known as zero-shot learning. This makes it easier and faster to apply AI in healthcare. However, segmenting (or outlining) different parts of medical images, such as diseased tissue in pathology slides, is trickier. A simple click on a large image might point to something as small as a single cell or as large as a full tissue layer, so the AI needs to be very flexible in what it can detect.

Most current models can predict general results, but they aren’t very good at adjusting to what a doctor specifically wants to look at. In this study, we tested whether using a Large Language Model (LLM) to guide the image analysis with different written instructions (called prompts) makes these models more adaptable than traditional methods that rely on fixed task labels.

Our work includes four key contributions:

- We built an efficient system that uses customized language prompts to help the AI flexibly identify different structures in medical images.

- We compared how well the model performs when given fixed prompts versus more natural, free-text instructions.

- We created a special dataset of kidney pathology images along with a variety of free-text prompts tailored to those images.

- We tested how well the model handles new and different cases when it is applied to images after training.

Overall, our approach shows that allowing doctors to guide the AI with flexible language prompts could make medical image segmentation more accurate and user-friendly.