PSY-PC 3190: Language and the Brain

Deborah Levy working with

James Booth, Professor of Psychology and Human Development

Problem

A difficult trade-off in scientific writing is the balance between accessibility and accuracy; scholarly science writing is often so meticulously accurate that it becomes inaccessible, while popular science writing is often so accessible that it can’t be accurate. The ability to effectively read, evaluate, and create scientific writing in all of its forms – scientific literacy – is crucial for engaging with scientific content in the real world.

Given recent increases in media coverage of neuroscience, as well as mounting controversy surrounding public trust in science at large, it is especially important that young adults studying psychology and/or neuroscience become critical and confident creators and consumers of scientific literature. However, both instructor assessment and student self-report suggest that students’ ability to confidently evaluate scientific writing remains underdeveloped (Hubbard & Dunbar, 2017; Snow, 2010).

In the hopes of increasing scientific literacy among such undergraduates, we created an online module to emphasize critical skills in scientific reading and writing for students taking PSY-PC 3190, “Language and the Brain”.

Approach

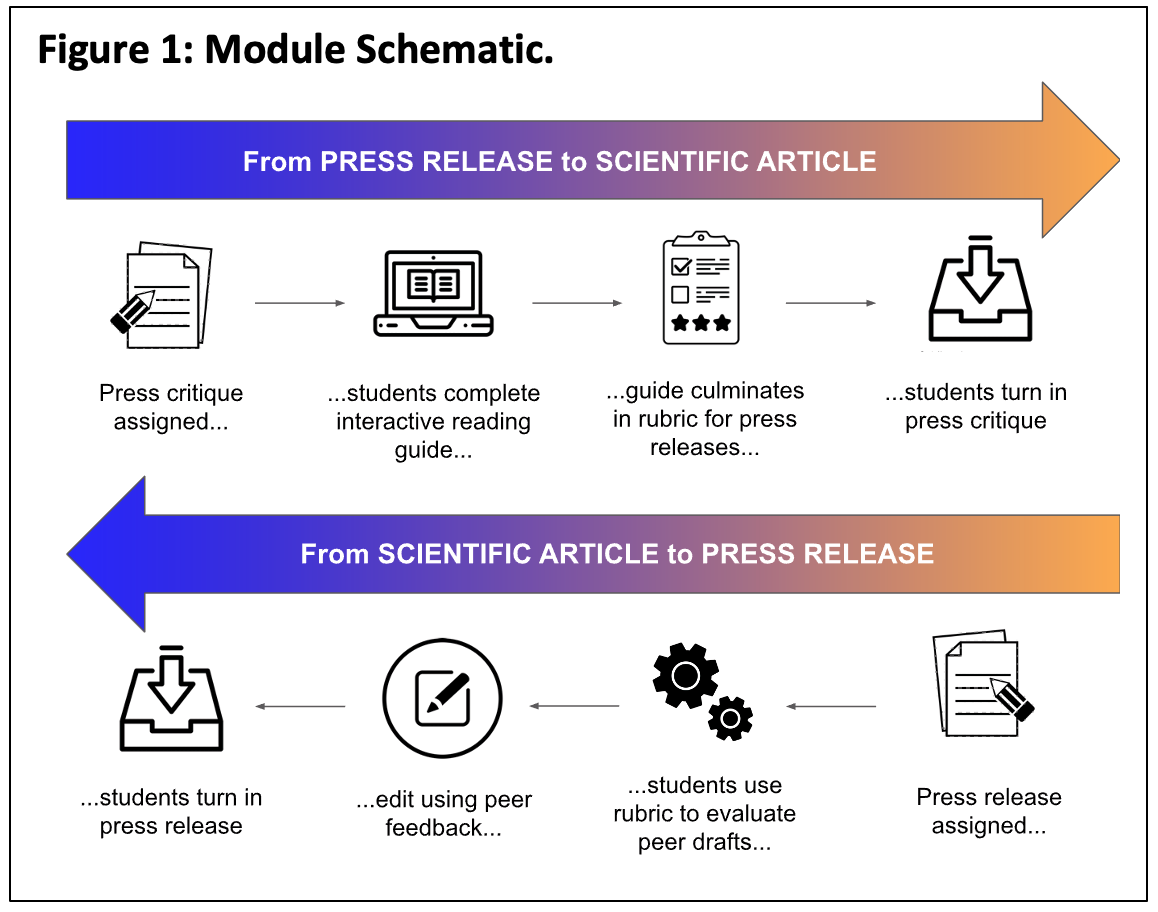

We created a multi-part online module to build critical skills in scientific reading and writing for students taking the Fall 2020 course. The online module consisted of (1) an interactive reading guide, (2) a rubric for evaluating press releases, and (3) an online system for anonymous peer review, used in conjunction with a set of assignments in the course (see Figure 1). The module’s efficacy was assessed using surveys in which students rated their comfort, confidence, and ability in reading and writing about scientific topics. Surveys were administered at the end of the Fall 2019 semester (a comparison cohort that did not use the module), at the beginning of the Fall 2020 semester, and at the end of the Fall 2020 semester.

Interactive Reading Guide

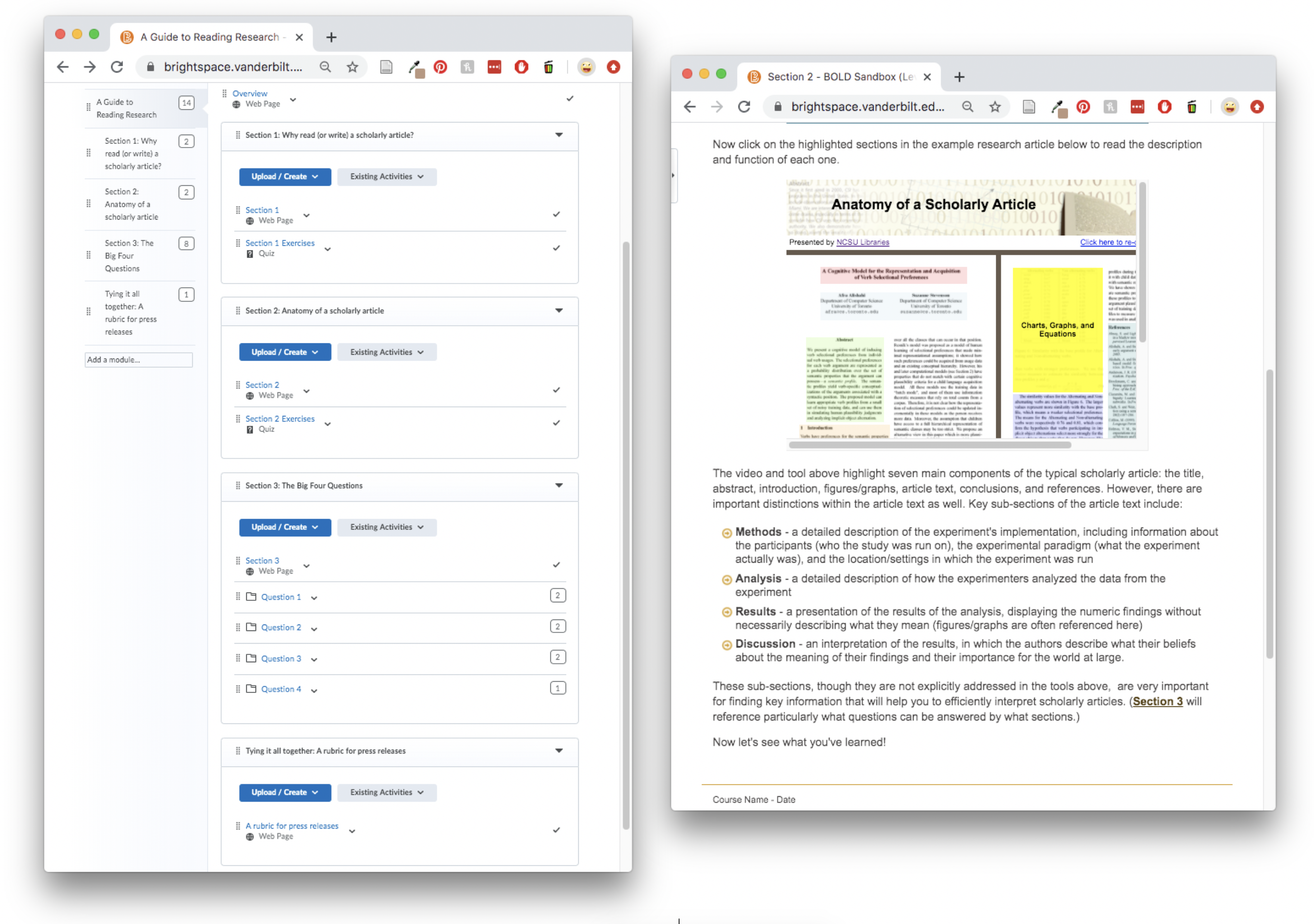

The interactive reading guide, implemented in Brightspace, introduces students to basic concepts of scientific writing, emphasizing differences between scholarly and non-scholarly sources. Each section of the guide includes a visually aided description of the skill being emphasized, along with a brief formative assessment to encourage active learning and help students monitor their understanding.

Rubric for Evaluating Press Releases

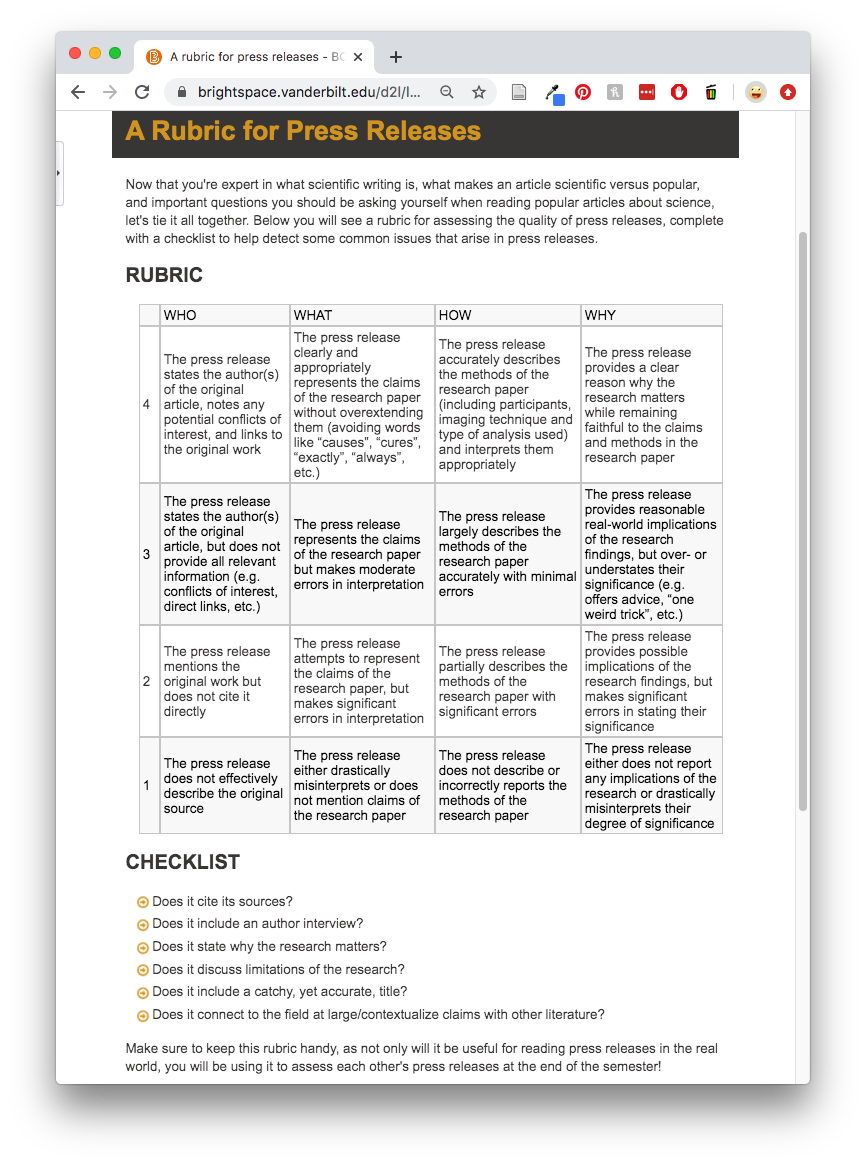

The conclusion of the reading guide is a section entitled “Tying it all together: A rubric for press releases”, which synthesizes the information covered in the earlier sections and provides students with a simple tool for quickly assessing the quality of popular science writing. Its primary purpose is to serve as the rubric for peer evaluation; however, it can also serve as a handy tool for evaluating scientific writing encountered “in the wild”.

Anonymous peer review

After completing a first draft of their press release assignment, students review each other’s drafts on Teammates, where each draft is anonymously assessed by three randomly assigned peers using the rubric above. Students then have the opportunity to incorporate the feedback they received before turning in the edited assignment to their instructor.
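The source does not describe how the three peers were chosen (for example, whether Teammates or the instructor made the assignment). As a hypothetical illustration of one way to satisfy the stated constraints (three randomly chosen reviewers per draft, no self-review), the sketch below shuffles the roster once and has each draft reviewed by the three students who follow its author in the shuffled, circular order. The function name `assign_reviewers` and the roster of anonymized IDs are assumptions made for this example.

```python
import random


def assign_reviewers(students, k=3, seed=None):
    """Map each author to k distinct peer reviewers (hypothetical scheme).

    The roster is shuffled once; each author's draft is then reviewed by
    the k students who follow that author in the shuffled, circular order.
    Nobody reviews their own draft, every draft gets exactly k reviewers,
    and every student writes exactly k reviews.
    """
    if len(set(students)) != len(students):
        raise ValueError("student identifiers must be unique")
    if len(students) <= k:
        raise ValueError("need more than k students for k distinct reviewers")
    order = list(students)
    random.Random(seed).shuffle(order)
    n = len(order)
    return {
        order[i]: [order[(i + step) % n] for step in range(1, k + 1)]
        for i in range(n)
    }


# Usage with a made-up roster of ten anonymized IDs.
roster = [f"student_{i:02d}" for i in range(10)]
for author, reviewers in assign_reviewers(roster, seed=2020).items():
    print(author, "->", reviewers)
```

The circular-shift design trades some randomness for balanced workload: drawing three reviewers independently for each draft would be "more random" but could leave some students with far more reviews to write than others.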

2020 versus 2019 semester results

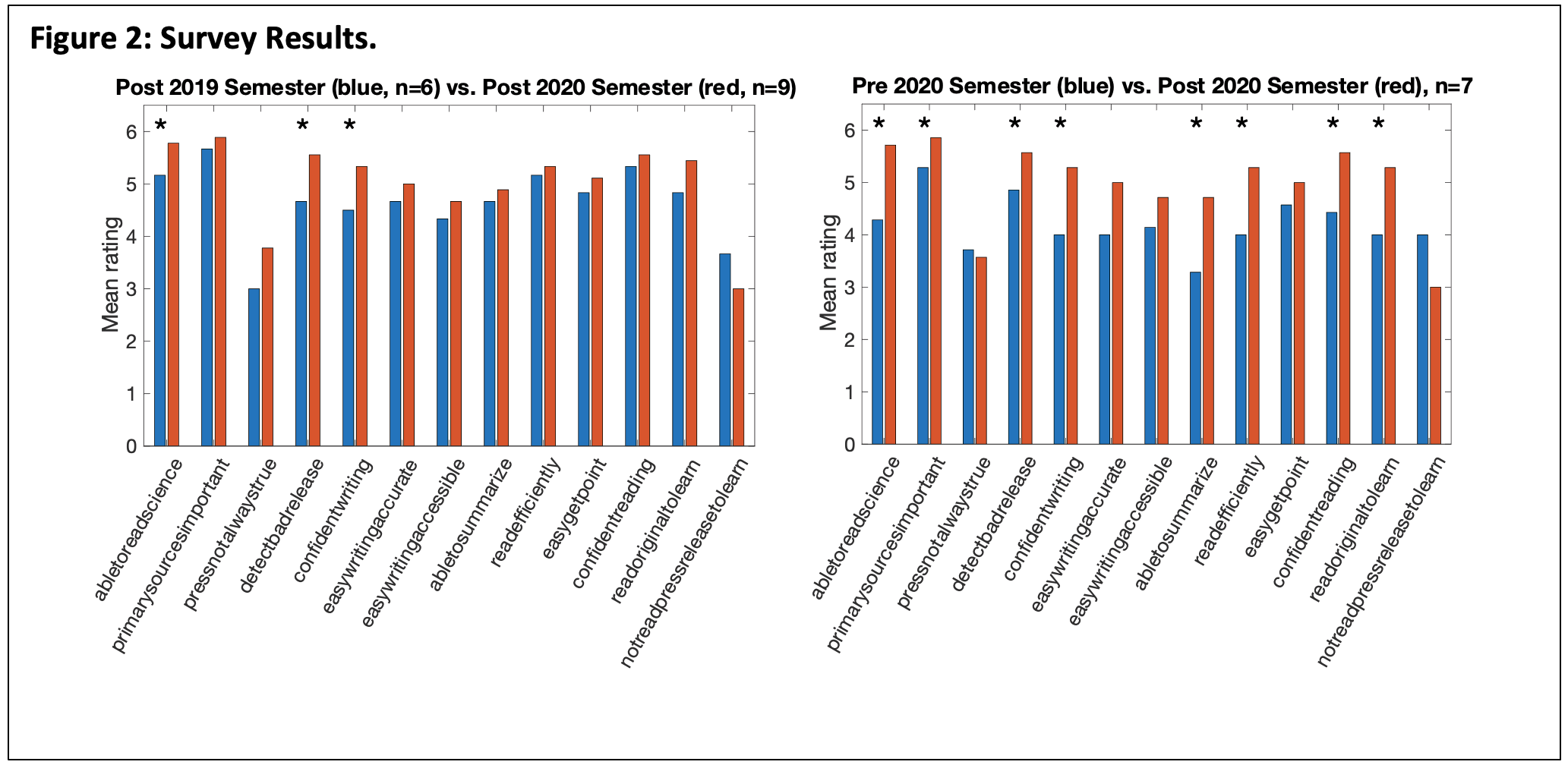

Independent-samples t-tests revealed improvements on three distinct measures of scientific literacy in the 2020 cohort relative to the 2019 cohort (* p < .05, one-tailed). Compared with students in the 2019 cohort, who did not complete the module, students in the 2020 cohort, who did, felt more able to read and evaluate articles about science in the popular press, were less inclined to trust low-quality press releases, and felt more confident in their ability to write about scientific topics (see Figure 2 left).

Within the Fall 2020 cohort, responses also improved from the pre-semester to the post-semester survey on eight distinct measures of scientific literacy (* p < .05, one-tailed): self-perceived ability to read and evaluate scientific writing; perceived importance of consulting primary sources; lower trust in poorly written press releases; confidence in writing about scientific topics; self-perceived ability to summarize research articles; self-perceived knowledge of how to efficiently extract information from research articles; confidence in reading research without guidance; and likelihood of reading a research article to learn about a scientific topic (see Figure 2 right).

Qualitative student feedback echoed these quantitative results, describing the module as “intuitive, helpful, informative, interactive and enjoyable”. Although students also identified areas for improvement, the SciComm module was well received overall, with every component rated 4/6 or higher on average.
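Because the cohort comparison above rests on one-tailed independent-samples t-tests, a short worked sketch may help readers unfamiliar with the procedure. It assumes Student's pooled-variance test (the source does not say whether a Welch correction was used) and a one-tailed alternative that the first group scores higher; for measures where lower is better, such as trust in low-quality press releases, the group order would be reversed. It uses only Python's standard library, obtaining the p-value by numerically integrating the t density rather than calling a statistics package. All function names are hypothetical, and the ratings are invented for illustration; they are not the study's data.

```python
import math
from statistics import mean, variance


def student_t(a, b):
    """Student's (pooled-variance) independent-samples t statistic and df."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    # statistics.variance is the sample (n - 1) variance.
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se, df


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def one_tailed_p(t, df, steps=2000):
    """P(T >= t), i.e. the p-value for H1: mean(a) > mean(b).

    Uses symmetry, P(T >= t) = 0.5 - integral of the pdf from 0 to t,
    with the integral approximated by Simpson's rule (steps must be even).
    """
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - area * h / 3


# Invented 1-6 ratings for illustration only; these are NOT the study's data.
cohort_2020 = [5, 4, 6, 5, 4, 5, 6, 4]
cohort_2019 = [4, 3, 5, 4, 3, 4, 4, 3]
t, df = student_t(cohort_2020, cohort_2019)
p = one_tailed_p(t, df)
print(f"t({df}) = {t:.3f}, one-tailed p = {p:.4f}, significant: {p < 0.05}")
```

In practice, `scipy.stats.ttest_ind(a, b, alternative="greater")` runs the same pooled-variance test in one call (the `alternative` argument requires SciPy 1.6 or later); passing `equal_var=False` would switch it to Welch's test.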

Conclusion

Overall, we found that completion of our multi-component, interactive online module was associated with significant improvements in scientific literacy among students of Language and the Brain. These findings, together with qualitative student feedback, suggest that asynchronous online activities can be both enjoyable and useful for fostering crucial scientific literacy skills.

Citations

- Hubbard, K. E., & Dunbar, S. D. (2017). Perceptions of scientific research literature and strategies for reading papers depend on academic career stage. PLOS ONE, 12(12), e0189753. https://doi.org/10.1371/journal.pone.0189753

- NCSU Libraries. (2009). Anatomy of a scholarly article. https://www.lib.ncsu.edu/tutorials/scholarly-articles/

- Snow, C. E. (2010). Academic language and the challenge of reading for learning about science. Science, 328(5977), 450–452. https://doi.org/10.1126/science.1182597