Evaluation Reevaluation

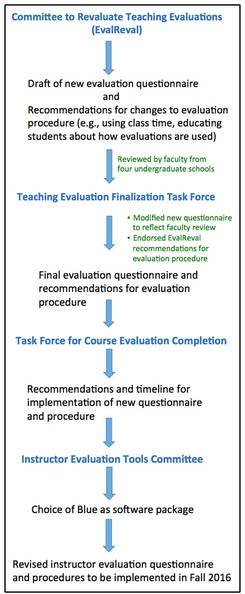

In Fall 2016, the undergraduate schools at Vanderbilt, along with the Divinity School and the Graduate School, moved to a mobile-friendly course evaluation system with new questions. This was the first significant revision to course evaluation questions in more than 20 years. These changes were based on the work of four committees with faculty and student representation, and were vetted by the faculties of the four undergraduate schools. Initial recommendations were made by the Committee to Reevaluate Evaluations in October 2014.


Feedback was gathered from faculty within each of the four undergraduate schools in Spring 2014 and incorporated into the design of the evaluation questions by the Teaching Evaluation Finalization Task Force in Summer 2015. During Spring 2016, the Task Force for Course Evaluation Completion reviewed the work of the other groups and made recommendations for implementation of the new system in Fall 2016, and the Instructor Evaluation Tools Committee identified a software application calibrated to support the university’s evaluation goals.

The reports of these groups are linked below, and a more detailed description of the revision process is available in MyVU.

Committee to Reevaluate Teaching Evaluations report

Teaching Evaluation Finalization Task Force report

Task Force for Course Evaluation Completion report

- What motivated the changes?

- How did the course evaluation process change?

- How were the questions on the evaluation questionnaire changed?

- Will this change faculty evaluations?

- Why is it better to include time in class for student evaluations?

- What are recommended strategies to increase student completion?

What motivated the changes?

The overall goals of the changes are to improve the presentation, design, ease-of-use, and content of the evaluation as well as to foster a campus culture that values student evaluations as part of a wider discourse and array of information about teaching.

Since Vanderbilt moved away from pencil-and-paper student evaluations, the percentage of students participating in the evaluation process (the response rate) has dropped dramatically, by roughly 25% since 2004, to 58% in 2015. The Committee to Reevaluate Evaluations (EvalReval) identified three primary purposes for course evaluations, summarized here:

- To provide student feedback to the faculty on the pedagogy and delivery of instruction.

- To inform departments and administration about faculty teaching in tenure, promotion, and reappointment.

- To provide students with data to inform course and instructor selection, a purpose evaluations have served since the implementation of Voice View in 2012.

Low response rates make course evaluations less valid measures for any of these three purposes. If a minority of students within a course provide evaluations, it is not possible to know whether the feedback provided accurately reflects the experience of a larger fraction of the students within the course. Not only does this lower response rate reduce instructors’ ability to use the evaluation process for formative purposes, it also decreases the validity of the evaluations as tools for administrative review and student choice.

The decline in response rate at Vanderbilt mirrors similar declines at institutions across the nation, and institutions have developed various responses, including eliminating the student evaluation process. The EvalReval committee identified several ways to improve the course evaluation process at Vanderbilt, thereby allowing it to better achieve the goals of formative and summative evaluation.

How did the course evaluation process change?

The Committee to Reevaluate Evaluations identified several perceived barriers to completion of student evaluations.

- Some of the questions in the evaluation questionnaire were poorly worded, unclear, or vague. This problem may have contributed to low response rates, and it decreased the value of the feedback for formative or evaluative purposes.

- Students often do not understand how instructors use evaluations to improve their teaching or how administrators use them to make promotion and retention decisions.

- Students may hold the misconception that faculty can see evaluations before grades are submitted.

- Students may be concerned that faculty can identify individual students’ responses.

- Evaluations are requested at a particularly busy time in the semester, and students often do not have time to complete forms for all classes.

The following changes were implemented to address these barriers.

- The evaluation questionnaire has been revised to provide clearer questions for students and more useful feedback for faculty and administrators.

- The revised evaluation questionnaire is accessed via Blue, a mobile-, tablet-, and laptop-compatible application that is more consistent with current technology norms than VOICE, Vanderbilt’s previous course evaluation tool.

- Faculty are encouraged to address the evaluation process with students, through classroom discussion and/or the syllabus. Suggestions for how to do this are provided below.

- Faculty are encouraged to allot about 20 minutes of class time for students to complete evaluations.

- Vanderbilt Student Government (VSG) and administrators will communicate with students about the student evaluation process each semester, and other avenues will be used as appropriate (e.g., Vanderbilt Visions and the Hustler).

- There will be ongoing assessment of both student and faculty satisfaction with the new software and course evaluation form.

How were the questions on the evaluation questionnaire changed?

The EvalReval committee revised the evaluation questionnaire with attention to clarity, length, and the ability to foster formative assessment. To this end, the group reviewed teaching evaluation materials from a variety of sources (described in Appendix 1 in their report) and identified eight dimensions of instruction that are commonly evaluated:

- instructor clarity;

- teacher-student interaction, rapport, and accessibility;

- instructor’s stimulation of interest in the course and subject matter;

- instructor’s feedback on student performance;

- course organization and planning;

- intellectual challenge and critical thinking;

- course workload and difficulty;

- student self-rated learning.

Based on these dimensions and input from student focus groups and faculty members across the university, the EvalReval committee created a revised evaluation questionnaire. This revised evaluation questionnaire was reviewed by faculty within each of the university’s four undergraduate schools, and the Teaching Evaluation Finalization Task Force incorporated this feedback into the final form. Importantly, the Finalization Task Force identified two changes to enhance the utility of the form for administrative evaluation purposes (i.e., decisions on promotion and tenure):

- the addition of a fixed-choice item on student self-evaluation of learning

- a rating scale for administrative items that matches the scale on Vanderbilt’s previous questionnaire, in part to minimize discontinuity between the old and new forms. Specifically, the items assessing the overall quality of the instructor and the course have the following five response options: poor, marginal, average, very good, excellent.

The Finalization Task Force’s work resulted in a revised questionnaire consisting of 15 standard fixed-choice questions and three open-ended questions. Together, these questions address the eight key dimensions of instruction, provide useful formative feedback for instructors, and can be used effectively in administrative review processes.

Will this change faculty evaluations?

The two questions that have traditionally been used for formal evaluation purposes broadly across campus (i.e., overall rating of the instructor and overall rating of the course) were retained in the revised evaluation questionnaire. Further, the scales used for these items did not change. Some units have also traditionally relied on a question about student self-evaluation of learning; this question is also included in the revised questionnaire. Thus, the revised evaluation questionnaire allows continuity in the faculty evaluation process.

Why is it better to include time in class for student evaluations?

One of the challenges presented by the move to online evaluations is that student response rates declined sharply. One way to respond to this challenge is to reserve time in class for students to complete course evaluations. By setting aside 20 minutes of class time for this purpose, instructors not only increase overall response rates but also make it more likely that students have time to think through their responses. As a result, students have the opportunity to give less rushed, more thoughtful feedback, especially if this strategy is combined with the other recommended strategies below. Using class time may also help instructors distinguish the serious, considered input appropriate for course evaluations from the brief, off-the-cuff input common on social media, customer-feedback sites, and other online forums. Finally, setting aside class time communicates to students the importance of evaluations in the teaching mission of the university.

Logistically, responses that students start in class would be saved, and students could then edit, revise, or expand them up until the evaluation deadline, when all saved evaluations would be submitted automatically. When setting aside class time for evaluations, instructors should also leave the room to help ensure that students feel free to provide candid responses.

What are recommended strategies to increase student completion?

Based on the Vanderbilt committee reports described above as well as the literature on student course evaluations, the Center for Teaching (CFT) recommends the following action steps to increase not only student completion but also the quality and usefulness of student feedback:

- Designate time in class for students to complete evaluations, and let your students know why and when (see above).

- Tell your students that you value their honest and constructive feedback, and that you use student feedback to make improvements to your courses. If possible, share examples of how you have changed your courses as a result of student feedback.

- Let your students know that you are interested in both positive and negative feedback on the course. What aspects of the course and/or instruction helped them learn? What aspects might be changed to help future students learn more effectively?

- Describe the kind of feedback you find most useful. In most cases, specific feedback with examples is more useful than general statements. See the handout Providing Helpful Feedback to Your Instructors from the Center for Research on Learning and Teaching at the University of Michigan for examples of specific, constructive feedback.

- Remind students that evaluations are designed to be completely anonymous and that you will not be able to see any of their evaluations until after final grades have been submitted. Many students don’t realize these facts.

- Let students know that you are the primary audience for their feedback, but that others will potentially read their evaluations, including department and school administrators. Course evaluations play a role in personnel evaluations and in curriculum planning.

- Consider including language in your syllabus that addresses student evaluations. This reminds students to pay attention to their learning experiences throughout the semester and encourages them to respond more thoughtfully on the course evaluations.